Last week I had the pleasure of interviewing Byron Southern, a theoretical physicist and professor of quantum mechanics at the University of Manitoba. With a philosophy and psychology background, I felt out of my element interviewing a physicist, but he did a great job of filling in the gaps. What I discovered was that quantum mechanics, the theory of why atoms and subatomic particles behave the way they do, underlies most technology that depends on the conduction of charge. In other words, the workings of every electronic device with transistors, from a Sega Genesis to a calculator wristwatch, rely on the principles of quantum mechanics.

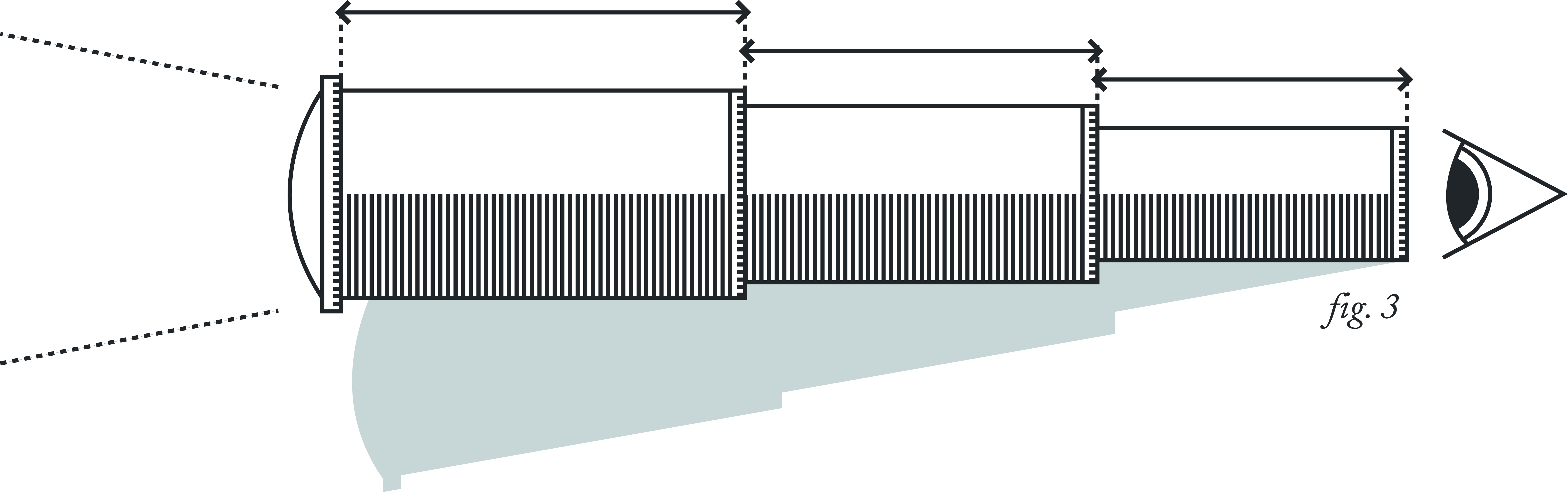

Part of the difficulty in judging the potential benefits of basic research into quantum phenomena, Southern points out, is that its practical applications are rarely realized immediately. In the case of Einstein’s discovery of stimulated emission, which laid the groundwork for the development of the laser, the application didn’t come about until 43 years after the original research. However, according to Southern, “When you look at the laser now, you can’t get away from it. [Any] electronic device that reads cards uses it.” It’s in everything from bar code readers to DVD players, but at the time, it was just a discovery about how atoms interact with light.

Sometimes, even when we have the application, its full potential is not immediately realized. Thomas Watson Sr., who led IBM for some 40 years, is often quoted as saying, “I think there is a world market for maybe five computers.” According to a report by Forrester Research, more than one billion computers were estimated to be in use at the end of 2008.

The ubiquity and importance of computers highlight the need for faster information processing technology. However, we are approaching the limits of what traditional processors can accomplish. In The Greatest Show on Earth: The Evidence for Evolution, Richard Dawkins describes in detail Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years as transistors are miniaturized. He points out that for the past 30 years transistors have been getting exponentially smaller, a trend that continues to hold true. According to the Institute for Quantum Computing (IQC), based at the University of Waterloo, in 10 to 15 years transistors will become so small that they approach the size of atoms. When this happens, classical physics will be ill-equipped to explain the behaviour of transistors, and a greater understanding of quantum mechanics will be needed to exploit their potential.

Classical physics, according to Southern, operates under the assumption that we can “know both the position and velocity of a particle as accurately as we can measure them,” meaning that our knowledge is limited only by the quality of our instruments. By contrast, quantum mechanics states that it’s impossible to know both the position and velocity of a particle with arbitrary precision at the same time: the more precisely one is known, the less precisely the other can be. This quirk of quantum mechanics is more commonly known as the Heisenberg uncertainty principle.
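
Stated as a formula, the principle is usually written in terms of momentum rather than velocity. What follows is the standard textbook form, not something quoted from Southern, and the symbols are introduced here only for illustration: $\Delta x$ is the uncertainty in position, $\Delta p$ the uncertainty in momentum, $m$ the particle’s mass, $v$ its velocity and $\hbar$ the reduced Planck constant.

$$\Delta x \, \Delta p \ge \frac{\hbar}{2}, \qquad p = mv$$

The smaller the uncertainty in position, the larger the uncertainty in momentum must be, and vice versa, no matter how good the instruments are.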

In traditional computing, information is stored in bits, each of which is either on (one) or off (zero), so data takes the form of a series of ones and zeros, also known as binary code. A quantum bit (qubit) can exist as a one, a zero or a superposition of both. It’s the possibility of this superposition state that leads to a potential increase in computing power, since the “binary alphabet” of a quantum computer can be more complex than a series of ones and zeros.
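
For readers who like to see the idea in code, here is a minimal sketch in Python of the difference between a bit and a qubit. It assumes the usual textbook picture, which is not spelled out in the interview: a qubit is described by two numbers called amplitudes, and the chance of reading a zero or a one is the squared size of the matching amplitude. The function name and variables are made up for this illustration, and the code only does the arithmetic; it does not simulate a real quantum computer.

```python
import math


def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for a qubit with amplitudes alpha and beta.

    Hypothetical helper for illustration only. The amplitudes may be
    real or complex; they are normalized so the two chances add up to 1.
    """
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


# A classical bit is always exactly zero or exactly one.
definitely_zero = (1, 0)
definitely_one = (0, 1)

# An equal superposition: both amplitudes are 1/sqrt(2).
equal_superposition = (1 / math.sqrt(2), 1 / math.sqrt(2))

# An uneven superposition that leans toward zero.
leaning_zero = (math.sqrt(3) / 2, 1 / 2)

states = [
    ("zero", definitely_zero),
    ("one", definitely_one),
    ("equal superposition", equal_superposition),
    ("leaning toward zero", leaning_zero),
]

for name, (alpha, beta) in states:
    p0, p1 = measurement_probabilities(alpha, beta)
    print(f"{name}: P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

The first two states behave like ordinary bits, while the last two have no classical counterpart: reading them gives a zero or a one with the listed chances. In the same textbook picture, describing several qubits at once takes one amplitude for every possible string of ones and zeros, which is part of why the quantum “alphabet” is richer.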

The IQC recently demonstrated the potential of quantum computing by using a single qubit to solve a complex physics problem that had proven difficult for classical computers to handle. Gina Passante, lead author of a recent paper in Physical Review Letters, described the result, saying the work “is an important stepping stone to realizing larger quantum computers which will solve exciting problems and have countless applications.”

It’s hard to imagine a more significant contribution to technology at this juncture than an exponential increase in computing power. This could have significant ramifications for fields such as engineering, medical technology and space exploration. Currently our understanding of complex biological processes, like the folding of proteins into their 3D structures, which is vital to understanding diseases such as Alzheimer’s, Parkinson’s and cancer, is limited by computing power. Although distributed computing efforts like Folding@Home create faux supercomputers by linking thousands of home computers via the Internet, quantum computing could bring monumental improvements in information processing that would let us solve these problems far more quickly.